
The NVIDIA GTC AI developer conference started yesterday, but the big announcements are coming in fast and furious today. This one is all about some powerful AI development hardware, known as DGX Spark and DGX Station. These names come out of left field, but the technology upon which they're built is a known commodity: the Grace Blackwell AI supercomputer architecture, which has expanded into multiple chips.

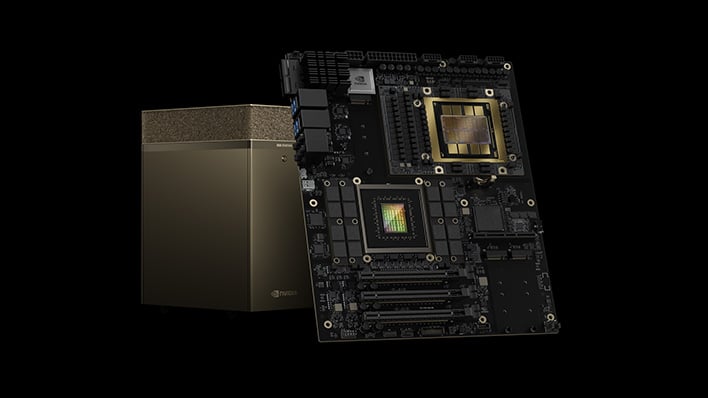

Back at CES, NVIDIA announced Project DIGITS, its AI acceleration developer workstation powered by Grace Blackwell. That's a combination of its Arm CPU design coupled with a Blackwell GPU, all backed by a whopping 128GB of memory. It's powerful, small, and laser-focused on giving AI developers a suitable workstation for their efforts. Now, Project DIGITS has a new name: DGX Spark. That system is accompanied by a new behemoth based on Blackwell Ultra, DGX Station.

Project DIGITS Becomes DGX Spark

NVIDIA says that DGX Spark is the world's smallest AI supercomputer. Its focus is to give data scientists, AI researchers, robotics developers, and students all the power they need to advance their fields of work and study and develop the realm of physical AI. NVIDIA's Cosmos real-world foundation model and the GR00T robotics foundation model are at the heart of robotics and autonomous vehicles, which is where the company foresees the biggest growth over the next several years. The idea is that development would start on these powerful DGX Spark systems and seamlessly transition to the cloud, where the same frameworks are powered by the company's enormous H100 GPUs.

DGX Station Scales AI in the Enterprise

On top of that, NVIDIA has also given each DGX Station its ConnectX-8 SuperNIC, which lets these systems scale with 800 gigabits per second of network bandwidth. This allows multiple DGX Stations to be networked together and distribute the load at very high speeds. These systems were custom-built for NVIDIA's NIM inference microservices and its AI Enterprise platform, which makes distributing these microservices easier at scale.

DGX Spark and DGX Station Availability

Those interested in DGX Station will have to wait a bit, as systems built on this technology will be available from NVIDIA's manufacturing partners later this year. Companies that have signed on to build DGX Station products include (alphabetically) ASUS, BOXX, Dell, HP, Lambda, and Supermicro.
